Dynamics of Multiple Improvement Efforts: The Program Life Cycle Model

Rogelio Oliva, Universidad Adolfo Ibáñez, Balmaceda 1625, Recreo, Viña del Mar, Chile. E-mail: roliva@uai.cl

Scott Rockart, Massachusetts Institute of Technology, Sloan School of Management, E52-511, Cambridge, MA 02139, USA. E-mail: srockart@mit.edu

Introduction.

This paper is part of a series of studies on continuous improvement programs written by members of the System Dynamics Group at the Sloan School of Management at MIT. The research is supported through funding by the Transformations to Quality Organizations program of the National Science Foundation and by the partner corporations (Jones, Krahmer et al., 1996; Sterman, Repenning et al., 1996).

The purpose of the entire series is to provide the basis for a dynamic framework, and formal models, through which to understand the key determinants of success or failure of quality improvement efforts. This particular study provides an overview of the dynamics that emerge from deploying multiple improvement programs.

Firms frequently undertake multiple improvement programs simultaneously and in sequence. To understand the implications of implementing multiple programs, a set of dynamic hypotheses was developed from individual program histories and the interplay across programs at one research site. The core dynamic hypothesis and structural elements identified are captured in a system dynamics model built to explore the improvement program life cycle (PLC). While the PLC model is being developed to help explain broad industry phenomena, it is primarily grounded in the experience of one of our research partners, which launched more than thirty distinct improvement programs over the last fifteen years. See Oliva, Rockart and Sterman (Forthcoming) for a full description of the site, research method, and findings.

Dynamic Hypothesis.

Program histories from our research site have clarified the importance of a few key resources, and the central role of employee perception of program value, in successfully launching and sustaining improvement programs.

Limited resources for improvement.

Three basic resources appear to be needed to sustain an improvement program. Programs that lack any one of these three resources (employee time, managerial time, and skill with program tools) have proven unlikely to succeed.

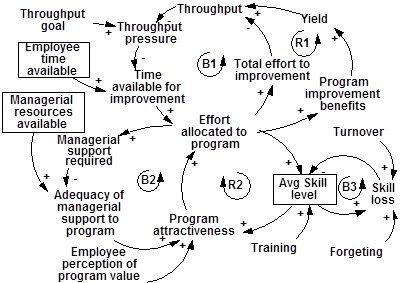

Employee time available for improvement program effort is limited by the total available time and by the effort needed to achieve basic work objectives such as production throughput. If employees attempt to allocate too much of their time to improvement, throughput will drop, bringing greater pressure on employees to reduce the time spent on improvement activity (loop B1 in Fig. 1).
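The balancing loop B1 can be illustrated with a minimal stock-adjustment sketch. It is not the PLC model's formulation: the function name `simulate_b1`, the weekly time step, and every parameter value (a 40-hour week, 36 hours needed for throughput, a desired 10 hours of improvement work, and the two adjustment times) are assumptions chosen only for illustration.

```python
def simulate_b1(total_hours=40.0, required_hours=36.0, desired_hours=10.0,
                adjust_weeks=4.0, pressure_weeks=1.0, weeks=104):
    """Weekly Euler simulation of balancing loop B1.

    All names and values are hypothetical; none are taken from the PLC model.
    Returns the weekly improvement hours per employee at the end of each week.
    """
    improvement = 0.0  # stock: weekly hours spent on improvement
    path = []
    for _ in range(weeks):
        # Hours left for basic work once improvement effort is taken out.
        production = total_hours - improvement
        # A throughput shortfall appears when production hours fall below the requirement.
        shortfall = max(0.0, required_hours - production)
        # Employees drift toward the improvement effort they would like to give ...
        growth = (desired_hours - improvement) / adjust_weeks
        # ... while throughput pressure pushes improvement time back down (loop B1).
        pushback = shortfall / pressure_weeks
        improvement = max(0.0, improvement + growth - pushback)
        path.append(improvement)
    return path


if __name__ == "__main__":
    constrained = simulate_b1()
    unconstrained = simulate_b1(desired_hours=3.0)
    print(f"desired 10 h, slack 4 h: settles near {constrained[-1]:.2f} h")
    print(f"desired 3 h, slack 4 h: settles near {unconstrained[-1]:.2f} h")
```

Under these assumed values, improvement effort settles between the four hours of slack and the ten hours employees would like to give. When the desired effort fits inside the slack, the pressure term never becomes active.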

Managerial time is another limited resource, only a portion of which is usually available for improvement activity. Managerial support of programs involves allocating time to understand, demonstrate support for, and clear obstacles to programs. Insufficient managerial attention can limit the effort allocated to each program by reducing the attractiveness of program involvement. As more effort is allocated to programs, the available managerial support may become inadequate, lowering program attractiveness and constraining the growth of effort (B2). Time resources can be aggressively managed to help programs succeed. According to Deming (1982), the ideal of continuous improvement programs is to liberate resources for improvement through improvement of the process, creating a continuous and self-sustaining mechanism (R1). However, in the push for improved financial performance, management may be tempted to convert freed employee and managerial time into cost savings, thus cutting short the reinforcing process of improvement.

Fig. 1 - Resource limitations on improvement programs

The third key resource is the base of program-specific skills held by employees. A program becomes more attractive, and opportunities to use the tools become more apparent, as employees gain greater capability and confidence with the related skills. Skills are increased through experience with the program's tools, creating a reinforcing process that helps sustain an improvement program (R2). Nevertheless, the skill creation process also needs to be managed. If training takes place too early, employees not only begin to forget but may also become cynical about management support for the program. Additionally, normal employee turnover will strip an organization of its experienced personnel, making continued training critical.
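The interplay between the reinforcing skill loop (R2) and the losses from forgetting and turnover can be sketched as a single stock. This is a simplified illustration, not the PLC model's structure: the function `simulate_skill`, the treatment of skill as a fraction between 0 and 1, the assumption that tool use is proportional to skill, and all rate values are hypothetical.

```python
def simulate_skill(initial_skill=0.1, learn_rate=0.2, loss_rate=0.05,
                   training_rate=0.0, weeks=300):
    """Weekly Euler simulation of reinforcing loop R2 with skill loss.

    Skill is a fraction between 0 and 1. All names and values are hypothetical.
    Returns the skill level after the given number of weeks.
    """
    skill = initial_skill
    for _ in range(weeks):
        # Tool use rises with skill, and skill is gained through tool use (R2).
        learning = learn_rate * skill * (1.0 - skill)
        # Continued training adds skill independently of current tool use.
        training = training_rate * (1.0 - skill)
        # Forgetting and employee turnover both drain the skill base.
        loss = loss_rate * skill
        skill = min(1.0, max(0.0, skill + learning + training - loss))
    return skill


if __name__ == "__main__":
    print("low loss, no training:   ", round(simulate_skill(), 3))
    print("high loss, no training:  ", round(simulate_skill(loss_rate=0.25), 3))
    print("high loss, with training:",
          round(simulate_skill(loss_rate=0.25, training_rate=0.05), 3))
```

Under these assumed rates, skill sustains itself when learning through use outpaces loss, collapses when forgetting and turnover dominate, and is held at a modest level when continued training is added.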

The motivational driver. In addition to having skills, managerial support, and available time, employees must believe in the value of a program to be truly effective participants in improvement efforts. Training and managerial support appear to be able to create temporary excitement. Once that excitement begins to fade, it must be replaced by other sources of motivation. Command-and-control relationships can provide the motivation for programs that are easy to monitor, but are unlikely to work with programs where employee participation and contribution are more difficult to assess. Even where command-and-control relationships can be enforced, they cannot be successful in the long run because they make the initiative dependent on managerial supervision. A common theme within stalled improvement efforts is that those engaged in the program were unable or unwilling to ensure that the efforts were sustained once the program champion was removed. Furthermore, employees who work by following orders may never feel the need or take the time to truly understand the underlying purpose, thereby limiting their effectiveness.

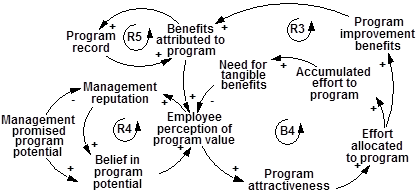

The prevailing alternative to command-and-control enforcement appears to be the conversion of initial excitement with a program to a long-term results-based belief in the program's value (R3 in Fig. 3). Once effort is allocated to a program, employees begin to look for tangible benefits that can be attributed to the program. As effort accumulates over time, the employees begin to trust their own experience with the program more than statements made by managers or trainers. If the benefits cannot be observed, then employees begin to lower their perception of a program's value and their motivation drops accordingly (B4 in Fig. 3).
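The shift from trusting promises to trusting one's own experience can be sketched as a weighted blend. The formulation below is an assumption made for illustration and is not taken from the PLC model: the function `perceived_value`, the saturating weight, and the parameter `half_weight_effort` (the accumulated effort at which experience and promises count equally) are all hypothetical.

```python
def perceived_value(promised, observed, cumulative_effort, half_weight_effort=100.0):
    """Blend promised and observed program value into a perceived value.

    The weight on personal experience grows with accumulated effort.
    The functional form and half_weight_effort are hypothetical.
    """
    if cumulative_effort < 0 or half_weight_effort <= 0:
        raise ValueError("effort must be non-negative and half_weight_effort positive")
    # Weight on own experience: 0 with no effort, 0.5 at half_weight_effort,
    # and approaching 1 as effort keeps accumulating.
    trust_in_experience = cumulative_effort / (cumulative_effort + half_weight_effort)
    return trust_in_experience * observed + (1.0 - trust_in_experience) * promised


if __name__ == "__main__":
    # A program promised a value of 10 but employees observe benefits worth only 2.
    for effort in (0.0, 100.0, 1000.0):
        print(effort, perceived_value(promised=10.0, observed=2.0, cumulative_effort=effort))
```

With no accumulated effort the perception equals the promise. As effort accumulates, the perception moves toward what employees actually observe, so a program whose benefits cannot be seen loses perceived value over time.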

Model Scope and Structure.

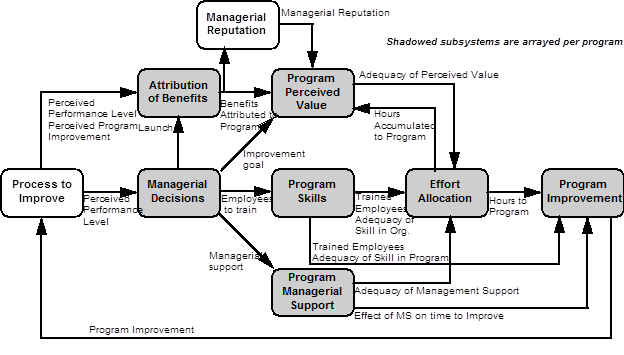

The PLC model was designed to provide insight into the pattern of program commitment and success observed at the site. The modeling effort has helped the authors to understand why programs gain the number of adherents they do when they do, and why they grow or shrink at different times. The model consists of 216 equations (of which 41 are state variables and eight are table functions) with 59 system parameters. Most of the model structure is arrayed to represent the multiple improvement programs. The model includes considerations such as the potential value of a program, the level of management support it receives, the reputation it builds for efficacy, business pressure, and the influence of contemporary programs. The model does not include market forces, support infrastructure, or job security concerns. The model is organized around two decision-making groups. Managers determine when to launch programs, how much support to give a program, how many people to train, and what improvement goal to state for the program. Individual employees then perceive that support and combine it with other factors, such as their skill level and time available, to decide how to allocate their time among efforts. Figure 2 shows a subsystem diagram of the model’s structure and its main variables. The model has been turned into a flight simulator in which players take the managerial role, and preliminary tests were performed with a group of managers from our partner corporations. Some insights from the model building process, simulation results, and the gaming sessions are described in the following section.

Fig. 2 - Subsystem diagram of PLC model structure

Findings.

Programs are initiated for a variety of reasons. Often firms launch programs when they perceive that firm performance is below that of a reference group, when the rate of performance improvement is declining, or simply when employees encounter a persuasive methodology for improvement. Although we have managed to replicate the macro-behavior of program launches from simple behavioral policies, we are continuing to explore the reasons for program initiation and the ties between why programs are launched and how likely they are to succeed. The experience of our research site and the results of the model simulations have lessons not only for sustaining individual programs but also for managing the interrelationships among multiple programs. Significant complementarities and competition across programs are apparent.

Inter-Program Complementarities. It is easy to overlook the benefits that a program indirectly provides to the organization by supporting later improvement efforts. Reflecting on the individual program histories, it is possible to extract instances where one program, eventually successful or not, benefited from previous efforts in two dimensions. First, the tools and skills learned, and mindset changes achieved, from one improvement program are often the same ones needed for other initiatives. Second, complementary bodies of knowledge and information specific to the company or task that are created during one effort are often used to carry out later programs.

Inter-Program Competition. Beyond the obvious competition for limited resources to sustain an initiative, we identified three interaction dynamics that affect the overall improvement rate.

Fragmentation of effort and attention. When a company takes on multiple programs it may find that it has people working with vastly different mental models. This "Tower of Babel" effect leads to frustration and reduces program effectiveness. One obvious solution would be to train virtually all employees in all programs. Simulation results, however, show that this strategy results in low skill levels and therefore minimal accomplishment in every program. This dilution of skill and understanding is evident in the lack of clarity frequently expressed by people who had been trained in several programs simultaneously.

Cynicism and erosion of management’s reputation. Initial participant motivation relies on the perceived potential of an improvement program. The perception of potential for a program is normally created by management through improvement promises and goals. When programs have a history of failure, management’s reputation and its ability to build a strong initial perception of program value decline. With no expectation of improvement results, employees tend to ignore programs, thus fulfilling the low improvement expectation and eroding management’s reputation even further (R4 in Fig. 3). Management’s inability to generate initial motivation for programs results in a series of program launches that are not taken seriously by employees: “the program of the month”.

Fig. 3 - Interactions Among Programs

Competition for credit. Continued participant motivation relies on the ability to attribute recognizable benefits to individual programs. The attribution process, however, is likely to be imprecise and biased when multiple programs are involved. There are at least three reasons for attribution errors. First, at an aggregate level, the total perceived improvements are likely to be less than the actual improvements. Since a high-level scan of operations can only compare the current performance level to a prior performance level, managers can only evaluate the net change in problems. However, new problems are introduced in accommodating new products and adopting new technologies. Looking only at net changes therefore underestimates the improvement benefits. Second, the benefits from a program are likely to be perceived with a delay. Since one way people determine causation is proximity in time (Hogarth, 1980), these benefits are likely to be attributed to later programs that are enjoying high visibility during their initiation phases. Third, because of saliency effects, historically successful programs will be credited with the benefits achieved by programs that have yet to build their reputation (R5). This underestimation and biased attribution of benefits reduces the strength of loop R3 in building motivation.
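The first and third attribution errors can be made concrete with a small arithmetic sketch. The proportional-to-salience rule, the function `perceived_credit`, and the numbers in the example are assumptions made for illustration; they are not results from the PLC model or the research site.

```python
def perceived_credit(actual_gains, new_problems, salience):
    """Split the net observed improvement across programs by salience.

    actual_gains: dict of program name -> problems actually eliminated.
    new_problems: problems introduced by new products or technologies.
    salience: dict of program name -> relative visibility or reputation weight.
    The proportional split is a hypothetical attribution rule.
    """
    if set(actual_gains) != set(salience):
        raise ValueError("gains and salience must cover the same programs")
    total_salience = sum(salience.values())
    if total_salience <= 0:
        raise ValueError("total salience must be positive")
    # A high-level scan of operations sees only the net change in problems.
    net_improvement = sum(actual_gains.values()) - new_problems
    # Credit follows salience, not each program's actual contribution.
    return {name: net_improvement * weight / total_salience
            for name, weight in salience.items()}


if __name__ == "__main__":
    gains = {"established": 4.0, "new": 6.0}      # made-up actual improvements
    weights = {"established": 3.0, "new": 1.0}    # made-up reputation weights
    print(perceived_credit(gains, new_problems=5.0, salience=weights))
```

In this made-up example the two programs together eliminate ten problems, but only a net improvement of five is visible, and the new program, which contributed the larger share, receives the smaller share of the credit.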

References

Deming, W.E. 1982. Out of the Crisis. Cambridge, MA: MIT Press.

Hogarth, R. 1980. Judgment and Choice. Chichester, UK: John Wiley & Sons.

Jones, A., E. Krahmer, R. Oliva, N. Repenning, et al. 1996. Comparing Improvement Programs for Product Development and Manufacturing: Results from Field Studies. In G.P. Richardson and J.D. Sterman (Eds.), Proceedings of the 1996 International System Dynamics Conference Vol. I (pp. 245-248). Boston, MA. Available: http://web.mit.edu/jsterman/www/SD96/Field.html.

Oliva, R., S. Rockart and J. Sterman. Forthcoming. Managing Multiple Improvement Efforts. In D. Fedor and S. Ghosh (Eds.), Advances in the Management of Organizational Quality Vol. III. Greenwich, CT: JAI Press.

Sterman, J., N. Repenning, R. Oliva, E. Krahmer, et al. 1996. The Improvement Paradox: Designing Sustainable Quality Improvement Programs. In G.P. Richardson and J.D. Sterman (Eds.), Proceedings of the 1996 International System Dynamics Conference Vol. II (pp. 517-521). Boston, MA. Available: http://web.mit.edu/jsterman/www/SD96/Summary.html.