Developing a Meaningful Systems Investigative Capability

A Systems Process

Dr. Keith K. Millheim and Dipl.-Ing. Thomas Göbler

Getting an organization, group, or individuals to buy in to a system thinking and/or system dynamics way of doing things always faces the hurdle of "grasping" some paradigm of "What is system thinking?" and, to a lesser extent, "What is system dynamics?" from a value-added point of view. This is particularly acute in organizations or companies heavily dominated by analytical disciplines, like the oil and gas industry and engineering organizations.

People drawn to system thinking or system dynamics assume that its concepts, ideas, and paradigms are easily understood by others, and that all that is needed is a little help from some system thinking literature, workshops, or systems gurus.

Experience from nearly thirty-five years in the engineering community, in large oil companies, smaller engineering consulting companies, and as Director of a University Engineering Institute, shows that it is extremely difficult to start and sustain any type of system thinking initiative, because the very individuals who could champion or authorize an initiative have no idea what "it" is.

And herein lies the dilemma. You need people knowledgeable in the technology of system thinking to activate the practical use of the technology within their respective organizations or companies. Yet most of these individuals lack the basic background knowledge of the breadth, depth, and use of the various aspects of system thinking needed to make an economic decision to commence a measurable, value-added initiative.

Over the years the author has tried various approaches to break this barrier of "What is system thinking and what value is it to me?" This paper presents a simple systems process that seems to offer some promise.

The five milestones in the process are as follows:

1. Identifying a problem of significant value-added proportions that lends itself to system investigation and "hooks" some customer(s).
2. Building a systems investigation team and supporting community that can identify the key controllable, influencing, and non-controllable variables.
3. Having a sub-process to identify the information needed and to formulate the beginnings of a system structure.
4. Having another sub-process to make the transition from the soft system investigation to the hard system dynamics modeling.
5. Evolving a value-added solution or product that the customers (management and/or value-added users) are prepared to buy and give credit for.

Identification of a Problem

The first and most critical step in starting a meaningful systems initiative is to identify a value-added use for the system technology. This presupposes that someone is investigating various problems that might be amenable to a systems approach. Wrong! Most organizations and companies are stuck just keeping the machinery running. Over ninety-five percent of the organization is involved in "the doing" part of the business, or the implementation. Years ago Millheim (1) studied the paradigm of how engineers or "analytical types" do their work. Even though this was written for the petroleum engineering community, it also applies to most other analytically driven communities. From this work it became apparent that most people cannot visualize problems or systems in a way that reveals a value-added application for soft or hard system technology. Millheim discusses the investigative engineer who looks for value-added problems to solve. Without individuals empowered to investigate (who, by the way, need investigative skills), meaningful problems remain invisible to management, who find comfort in reinventing the same old problems or complexity, disregarding the lessons of learning curve theory.

Therefore, the systems person's first challenge is to discover a problem that could "hook" the customer on gambling with system technologies. The difficulty is how to be a "problems detective" with limited exposure time to those who might really have this knowledge. There must be a strategy.

Usually a problem of significance to one person is not a problem of significance to others. And in many cases the person who actually possesses the problem owns it and is reluctant to give it up, because doing so would imply that he or she was impotent to solve it. Another difficulty in unearthing meaningful problems to attack is the paradigm held by many people: if this problem has been a problem for so long, how can "this systems stuff" succeed where other approaches tried and failed? It is a simple question of risk.

What the authors finally evolved was an iterative process of gathering groups of individuals for the specific purpose of finding one or more problems that, if solved, would impress management and show it the practical use of system technologies. At first these problems are very macro, almost religious. After these have all been politely rejected, the group's granularity starts to emerge. For the system investigator this is critical, because it signals the level of problem a given group is prepared for and capable of handling.

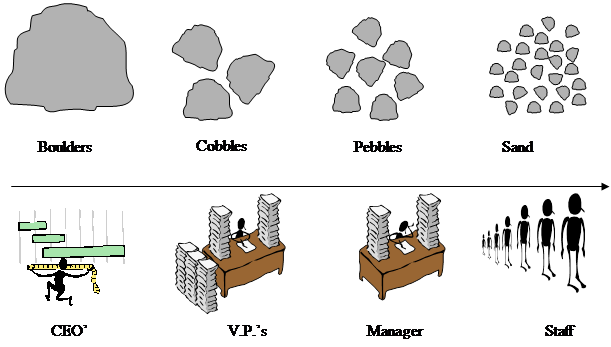

A brief explanation of granularity (a term we borrowed from our earth science brethren) is in order. Fig. 1 shows the granularity for most organizations. First there are the boulders. These are CEO-type problems and are the most difficult to get commissioned, because the CEO and his immediate reports must find, agree on, and take a risk on such problems. They also need to find the time, which is nearly impossible. The next size could be called cobbles: smaller than boulders but bigger than pebbles. This size could equate to the vice presidents and their reports. Next come the pebbles (managers) and their sand grains (supervisors and below).

The group's granularity always defines the doable and potentially value-added problems. If a group goes above its granularity (pebbles wanting to solve cobble-size problems), it will immediately hit the hurdle of trying to solve problems where it has little knowledge of, or power over, the controllable variables of the system. This point of view of the system under investigation makes or breaks the problem initiative.

Experience has shown that the problem to investigate must satisfy five criteria:
1. It has the right granularity.
2. More than one person agrees that it is a value-added problem.
3. There is a good possibility of gathering information about the problem (the system of interest).
4. Enough people possess knowledge about the system to work on the problem.
5. Most important, enough resources (people, time, and money) are available to attack the problem with some probability of conclusion.

If all of the above criteria are satisfied, the first milestone of a system initiative is achieved.
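The granularity rule and the five criteria above amount to a screening checklist. The Python sketch below illustrates that reading under our own assumptions: the numeric ranking of the Fig. 1 levels, the field names, the rule that only working *above* the group's level counts as a granularity failure, and the example problem are all hypothetical, not a tool the authors describe.

```python
from dataclasses import dataclass
from enum import IntEnum


class Granularity(IntEnum):
    """Hypothetical ranking of the Fig. 1 levels (higher = bigger problems)."""
    SAND = 1      # supervisors and below
    PEBBLE = 2    # managers
    COBBLE = 3    # vice presidents and their reports
    BOULDER = 4   # CEO and immediate reports


@dataclass
class ProblemCandidate:
    name: str
    problem_level: Granularity
    supporters: int               # people who agree it is a value-added problem
    information_obtainable: bool  # good possibility of gathering information
    knowledgeable_people: bool    # enough people who know the system
    resources_available: bool     # people, time, and money


def failed_criteria(problem: ProblemCandidate, group_level: Granularity) -> list[str]:
    """Return the criteria a candidate fails; an empty list means milestone 1 is met."""
    failures = []
    # (1) Assumption: only a problem above the group's granularity fails,
    #     e.g. pebbles attempting a cobble-size problem.
    if problem.problem_level > group_level:
        failures.append("granularity")
    # (2) More than one person must agree the problem is value added.
    if problem.supporters < 2:
        failures.append("agreement")
    if not problem.information_obtainable:   # (3)
        failures.append("information")
    if not problem.knowledgeable_people:     # (4)
        failures.append("knowledge")
    if not problem.resources_available:      # (5)
        failures.append("resources")
    return failures


if __name__ == "__main__":
    # Hypothetical cobble-size problem brought forward by a group of managers.
    candidate = ProblemCandidate("reduce rig downtime", Granularity.COBBLE, 3, True, True, True)
    print(failed_criteria(candidate, Granularity.PEBBLE))  # ['granularity']
```

Returning the list of failed criteria, rather than a single yes/no, mirrors the iterative group sessions: it shows which criterion to renegotiate before rejecting a problem outright.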

Building a Systems Investigation Team

A systems investigation team (if successfully organized) is usually spawned from the right granularity of a problem. To test the composition (and granularity) of a given problem, the team should demonstrate expert knowledge of the controllable, influencing, and non-controllable variables affecting the system.

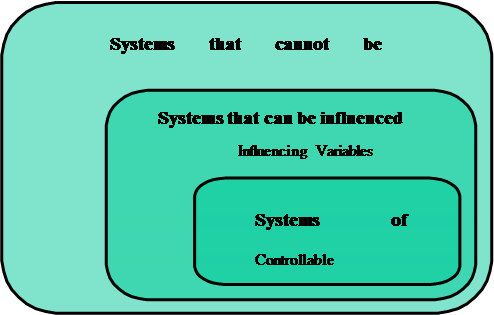

Before going on, it is important to explain the concept of variable types: (1) controllable, (2) influencing, and (3) non-controllable.

• The team must recognize that the problem it chooses should put it in the pilot's seat to fly the system. If the team members are passengers or flight controllers, the problem solution or value added is questionable. Therefore, the idea of controllable variables means the team has enough controlling power to steer or manage the system so as to alter the outcomes directly.

• Influencing variables are the variables that the team recognizes are controlled by the next level or levels above it (perhaps the cobbles for the pebbles). Usually the influencing variables should focus on one level above the system of interest. For example, a company transporting oil by tanker has control over the tankers it owns, the size of tanker, and the choice of market. However, it cannot control whether a given harbor requires single- or double-hulled vessels, although it can influence the harbor management's policy on this issue.

• Uncontrollable variables are variables exogenous (outside) to the system that the team recognizes as key "for the system". A good test for the key uncontrollable variables is usually the "scenario test": if an uncontrollable variable such as oil price can be varied (low/medium/high) and greatly affects the system of interest, yet the user has no control over it, it is a critical uncontrollable variable.
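The scenario test lends itself to a short numerical illustration. The Python sketch below assumes a hypothetical toy model (annual cash flow of a producer as production times margin), hypothetical low/medium/high values for oil price and for a harbor port fee, and a hypothetical threshold of 50% relative swing for calling a variable "critical". None of these numbers come from the paper; they only show the mechanics of varying one exogenous variable at a time.

```python
# Hypothetical toy model and inputs; chosen only to illustrate the scenario test.
def annual_cash_flow(oil_price: float, port_fee: float,
                     production_bbl: float = 1_000_000.0,
                     unit_cost: float = 12.0) -> float:
    """Cash flow (USD/year) of the system of interest under a toy margin model."""
    return production_bbl * (oil_price - unit_cost - port_fee)


BASE = {"oil_price": 25.0, "port_fee": 0.5}   # USD/bbl, medium case
SCENARIOS = {                                 # low / medium / high
    "oil_price": (15.0, 25.0, 35.0),
    "port_fee": (0.4, 0.5, 0.6),
}


def scenario_swing(variable: str) -> float:
    """Relative spread of the outcome when one exogenous variable goes low/medium/high."""
    outcomes = [annual_cash_flow(**dict(BASE, **{variable: value}))
                for value in SCENARIOS[variable]]
    return (max(outcomes) - min(outcomes)) / abs(annual_cash_flow(**BASE))


def critical_uncontrollables(threshold: float = 0.5) -> list[str]:
    """Exogenous variables whose scenario swing exceeds the (assumed) threshold."""
    return [name for name in SCENARIOS if scenario_swing(name) > threshold]


if __name__ == "__main__":
    for name in SCENARIOS:
        print(f"{name}: swing = {scenario_swing(name):.3f}")
    print("critical:", critical_uncontrollables())
```

With these assumed inputs the oil-price scenarios move cash flow by about 160% of the base case while the port fee moves it by under 2%, so only oil price is flagged as a critical uncontrollable variable.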

If a systems investigation team can clearly delineate these three variable types, it is on the way to defining a system structure for the problem of interest. For example, if Saudi Arabia were doing a systems investigation of world oil demand, it could correctly assume that one of its controllable variables affecting oil price is its oil production. Australia could not make this choice, nor could Exxon or Royal Dutch Shell; in fact, none of these three could even influence oil price. Perhaps Nigeria, Iraq, Kuwait, and Russia could influence it. But for most countries and oil companies, oil price is a critical uncontrollable variable. Fig. 2 summarizes these variable types.

Experience has shown that once a general problem has been identified and the team starts investigating the variable types, the problem (system) of interest is often modified or totally changed, for it takes a while for a team to "home in" on an airplane it can fly. Critical to this milestone is keeping a team working together long enough to visualize and map the system of interest. Most of the time the people and time resources diminish, leaving one to three individuals with the task of keeping the airplane in the air. Sometimes that is enough, but most of the time it is not, and the initiative is again lost.

Warning management in advance of this resource fallout danger is mandatory. Critical mass is essential in this early system formulation phase, and the commissioning management needs to be made aware of this milestone.

Information Needed and System Structure Development

Many value-added problems are identified and started with extreme gusto, only to stall and crash because either (A) the team lacks the collective knowledge of the problem to identify the key variables and required information, or (B) the group is knowledgeable but for one reason or another cannot obtain or access the key data sets.

A good example of this could be an oil company that wants to start operating in a country like Libya. The team might have a good sense of the key variables and basic system structure, but accurate data sets for Libya might be impossible to obtain, leaving the investigators to guess at the information.

Without a doubt, most organizations totally disregard the importance of critical data and the time necessary to gather and organize the data so that it is usable.

Such a situation arose at a company that wanted to improve its oil and gas exploration results. One of the critical data sets was the annual success of competing companies in finding oil and gas. The management of the investigating company assumed this information was impossible to get, and therefore saw no reason to go on with the study. When shown that all the information was in the public domain and that all it would take was "time and effort", they could not believe it, because their own experts had claimed for years that this data was unavailable or proprietary.

The trick for keeping a problem initiative going is the team's cleverness in playing detective to unearth these data sets. Herein lies the power of the prototype system dynamics model: it identifies the sensitivity of the critical variables and the required data sets, so as to prepare the team in advance for the resources (time, people, money) necessary to handle the data acquisition milestone. Generally speaking, if the team has no experience in this data detective work, it needs "wise" assistance to guide it in gathering enough quality data without gathering "too much". A major goal is to find a "data doorman" in the organization who knows where to go and whom to see to find the data.
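To show how a prototype model can point the data detective work at the inputs that matter, here is a minimal Python sketch of a one-stock system dynamics model with a one-at-a-time sensitivity ranking. Everything in it is a hypothetical assumption: the reserves stock, the discovery inflow (wells × success rate × discovery size, echoing the competitor-success data set above), the production outflow, all parameter values, and the +10% perturbation. It is a sketch of the general technique, not the authors' model.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Params:
    """Hypothetical parameters of a toy exploration stock-and-flow model."""
    wells_per_year: float = 20.0
    success_rate: float = 0.25       # fraction of wells that find oil
    discovery_size: float = 10.0     # million bbl per successful well
    depletion_rate: float = 0.08     # fraction of reserves produced per year
    initial_reserves: float = 300.0  # million bbl


def simulate(p: Params, years: float = 20.0, dt: float = 0.25) -> float:
    """Euler-integrate the reserves stock; return reserves at the end (million bbl)."""
    reserves = p.initial_reserves
    for _ in range(int(round(years / dt))):
        discoveries = p.wells_per_year * p.success_rate * p.discovery_size  # inflow
        production = p.depletion_rate * reserves                           # outflow
        reserves += (discoveries - production) * dt
    return reserves


def sensitivity_ranking(p: Params, bump: float = 0.10) -> list[tuple[str, float]]:
    """Relative change in the outcome for a +10% change in each input, largest first."""
    base = simulate(p)
    results = []
    for name in ("wells_per_year", "success_rate", "discovery_size",
                 "depletion_rate", "initial_reserves"):
        bumped = replace(p, **{name: getattr(p, name) * (1 + bump)})
        results.append((name, (simulate(bumped) - base) / base))
    # Inputs with the largest absolute effect deserve the most data-gathering effort.
    return sorted(results, key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    for name, effect in sensitivity_ranking(Params()):
        print(f"{name:18s} {effect:+.3f}")
```

With these assumed values the three discovery inputs each shift end-of-period reserves by roughly 9% and the depletion rate by roughly 7%, while the initial reserves barely matter after twenty years. In this toy case, effort would go into competitor success and discovery-size data rather than into refining the starting reserves figure.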

It is not surprising that most "practically successful" system structures are the right mixture of key variable identification and the appropriate data sets that support the structure. Many models fizzle and have no impact because of oversimplification, or because of overcomplexity with too many guesses or assumptions.

Practical experience has shown that at least one individual must be clearly accountable for the data detective work and should be in a symbiotic relationship with the systems modeler.

Transition from Soft to Hard Systems

Again and again, systems (problem-solving) initiatives fall apart because the commissioning managements have little or no knowledge of hard systems and are not prepared to capitalize an "unknown thing" like a system dynamics model. Buy-in for the soft system mapping, and even for building some cute little demo system dynamics models, seems doable if the first three milestones are reasonably well done and some usable results seem imminent. But commissioning a useful management flight simulator (MFS) requires a leap of faith that most organizations are not prepared to make.

Many system dynamics-oriented initiatives do not appreciate the skepticism of managements, particularly those composed of analytically skilled and trained individuals, toward computer-derived models, especially models that deal with human systems.

To bridge this milestone, the authors have learned to rely heavily on "analog marketing", that is, showing real examples of hard systems that the customers can relate to. Giving insight into a similar problem where a model was useful and produced meaningful value-added results is the first step in reducing the "model prejudice" and risk aversion most managements have.

Without a doubt, this milestone is the most difficult to overcome when management is uneducated about systems technology. Sometimes it is necessary to get management and its associates to experience the basics of system thinking through some type of training and hands-on experience with an MFS.

What the authors believe is critical to overcoming this marketing barrier is to hook and train the customer to do as much of the modeling work as possible. Without this commitment, it becomes a challenge to secure enough resources from management to build the system of interest, test it, and try to obtain some value-added results. Again, problem selection is paramount for demonstrating this value added.

Evolving the Value Added Product

It all has to do with credibility.

A problem is identified that has the potential to add value: give direction to a practical (doable) strategy, overcome a problem that has defied previous attempts to solve it, give management insights for a paradigm jump or shift, identify opportunities invisible to the competition, and so on. Yet most of the time management's expectations are not fulfilled, and unless there is a powerful champion keeping the systems initiative going, it fades away as a "tried and failed" attempt at something new.

Remember: to hook an organization into gambling people, time, and money, an initiative must show value added. Many times system initiatives are started and promises made without identified results.

Key to hooking and keeping a systems initiative going in a company is honest negotiation of what will be achieved if management agrees to commission the initiative. For example, it is sometimes better to seek a practical, smallish problem that has a high probability of showing value-added results than to go for a huge, complex problem that is replete with risk.

Solving a problem that clearly shows the cost benefit of using a systems approach builds credibility, and credibility is everything for securing management's trust to continue with anything.

The authors believe a complete, honest portrayal of the five critical milestones is essential to give an uninformed management an idea of what it can expect.

Analytically trained individuals generally have a hard time visualizing the concepts of system thinking and system dynamics, and they relate to analogs they are comfortable with. What this paper demonstrates is a way for the seller (of system technology) to sell to buyers who, because of their background, must be approached differently. They might not understand system technology, but they do understand processes.

Fig. 3 illustrates the previously described process with its five major milestones. However, the process should be viewed as iterative, with the constant search for a value-added, doable problem as the major strategy. If an appropriate team cannot be formed to work on the problem, either a new team should be created or a new problem found. The dotted line reflects this return to the problem definition. The same criterion applies to finding, gathering, and preparing the data (information) necessary for system analysis: if there is any doubt, the problem or the expected results should be renegotiated. Similarly, if the resources are not available to build the model (milestone 5), the problem should be restructured, completely changed, or not done at all. Sometimes it is better to say, "No, we can't do an appropriate system analysis with the available resources." Honesty always wins.

Lastly, if a value-added result cannot be agreed on, don't do the problem. Everybody loses, and the one chance for credibility is lost.
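The iterative character of Fig. 3 (any failed milestone sends the initiative back along the dotted line to problem definition, and an honest "no" ends it) can be sketched as a simple loop. In this Python sketch the milestone labels paraphrase the five milestones; the `passes` callback, the attempt limit, and the folding of "value-added result agreed" into the first milestone are our hypothetical assumptions, standing in for the team's and management's honest judgment.

```python
from typing import Callable

# Labels paraphrasing the five milestones of Fig. 3.
MILESTONES = [
    "identify problem",           # assumption: includes agreeing on the value-added result
    "build team",
    "information and structure",
    "soft-to-hard transition",
    "value-added product",
]


def run_initiative(passes: Callable[[str, int], bool],
                   max_attempts: int = 3) -> tuple[str, list[str]]:
    """Walk the milestones; any failure returns to problem definition.

    `passes(milestone, attempt)` is a hypothetical stand-in for an honest
    judgment of whether a milestone is met on a given attempt, where each
    attempt is a new or renegotiated problem.
    """
    path: list[str] = []
    for attempt in range(1, max_attempts + 1):
        for milestone in MILESTONES:
            path.append(milestone)
            if not passes(milestone, attempt):
                path.append("back to problem definition")  # the dotted line
                break
        else:  # every milestone passed on this attempt
            return f"completed on attempt {attempt}", path
    # Honesty always wins: no doable, value-added problem was found.
    return "declined", path


if __name__ == "__main__":
    # Hypothetical case: no appropriate team on the first problem, so a new
    # problem is chosen and every milestone is met on the second attempt.
    def example_passes(milestone: str, attempt: int) -> bool:
        return not (milestone == "build team" and attempt == 1)

    outcome, path = run_initiative(example_passes)
    print(outcome)  # completed on attempt 2
    print(" -> ".join(path))
```

Capping the number of attempts is one way to encode "sometimes it is better to say no": the loop ends with an explicit decline rather than an initiative that quietly fades away.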

Like it or not, many organizations deal with practical problems they crave solutions for. System technology is a powerful tool that can help solve these problems, but to break the "What is systems?" barrier, you need a strategy. This is one the authors have tried, and it seems to have achieved some success with analytically based organizations. We are sure there are others.

In the end it takes persistence.

References:

1. Millheim, Keith K.: "Inducing the Investigative Process", Journal of Petroleum Technology, Sept. 1991.


Fig. 1 Concept of Granularity

Fig. 2 Variable Types

Fig. 3 A Process for Commencing a Systems Initiative in an Analytically Driven Organization